Custom AI Systems & Research

Exploring new creative paradigms through AI, real-time systems, and speculative design

Overview

My ongoing research focuses on advancing the practical creative applications of AI across image, video, and language models. This work explores how emerging systems (diffusion models, generative video, and large language models) are reshaping visual storytelling, authorship, and the production process itself.

Through systematic experimentation and cross-model analysis, I study the aesthetic and operational potential of these tools: how diffusion models influence visual identity, how AI video models can extend performance and continuity, and how LLMs enable new frameworks for narrative design and creative collaboration.

Ultimately, this body of work aims to define next-generation creative methodologies—bridging artistic experimentation with practical, scalable applications for brands, studios, and storytellers navigating a rapidly changing technological landscape.

Featured Prototypes

AI Photography Prototype

AI image generators are capable of impressive results, but I still find the lack of granular control frustrating. So I set out to recreate the feel of a professional tethered photography workflow—shooting while connected to a computer so the photographer and clients can see images in real time.

This prototype adds a layer of AI mediation to tethered capture. While shooting live, the photographer and clients can use AI to composite the product or subject into entirely new environments on the spot—updating the prompt mid-shoot to effectively change “location.” The photographer retains control over composition, while AI expands what’s possible. Early results are very promising.

Training AI to Match Illustration Style

In collaboration with my colleague Stephen Halker, a highly talented illustrator, we assembled an informal dataset of his drawings and used it to train a custom model on his specific illustration style. We used Adobe Firefly for model training, and I was quite impressed with the results. Below are samples from the original dataset, followed by images we generated once training was complete.

Training Images

Generated Images From the Custom Model

The model did a remarkable job of capturing the flat, two-dimensional style, the color palette, and the general tone of the training images. It didn't succeed on every generation, but the hit rate was high enough to be noteworthy. Even more impressive was the model's ability to extrapolate the style to variations on the original dataset. In the examples below, we tested how far we could push it before it lost its way.

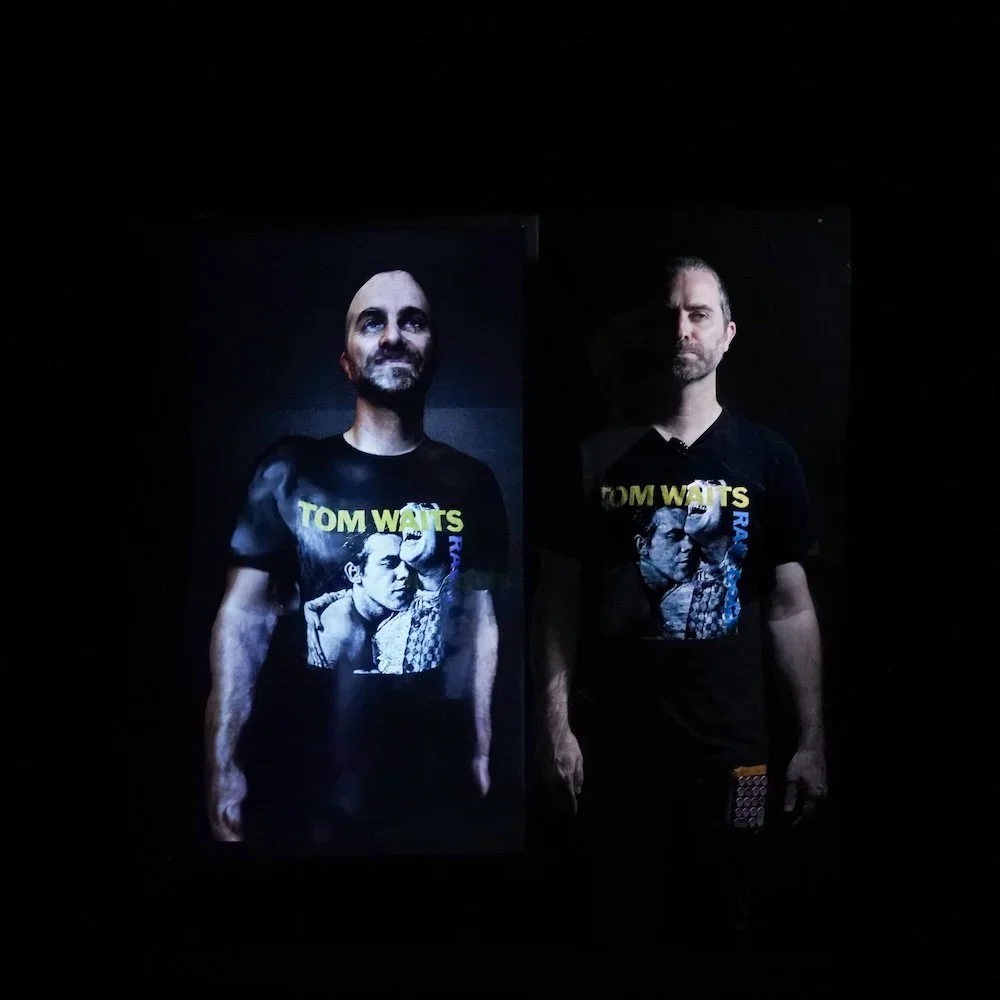

BODY / NOT BODY: A Simulation of Self

A durational performance installation that uses a fine-tuned large language model, voice cloning, and a digital avatar to create a simulation of myself that visitors can converse with in real time. This work explores digital self-representation, post-human embodiment, and the boundaries of authorship in an age of generative media.

Endless Story: A Spoken AI Choose Your Own Adventure

Designed specifically for my five-year-old daughter, Frances, this voice-activated, choose-your-own-adventure web app is built for children who can't yet read. I wanted to experiment with customizing LLMs to achieve results beyond simple chatbots and to explore their generative capacity. Built using the ChatGPT API, the prototype generates unique fairy tales in real time, always starring “Princess Frances” and a magical sidekick. The project explores how generative AI can support personalized, spoken storytelling experiences for kids, laying the groundwork for future iterations with customization, real-time responsiveness, and narrative structure.

Unicorn Princess Super Adventure - Retro Platform Video Game

A glitter-drenched, 8-bit fever dream where you can’t lose, only win louder. Built in p5play, this maximalist platformer flips classic video game logic on its head: every object you collect multiplies, your unicorn grows absurdly large, and eventually the world is swallowed in sparkles. Equal parts Lisa Frank and late-capitalist satire, it’s a joyful, purposeless romp designed to disrupt expectations.

Death Is Not the End - Web Experience

A tongue-in-cheek existential scrollytelling experience featuring rotating 3D skulls, smooth elevator jazz, and uncanny AI-generated compliments delivered by an AI clone of my own voice. Built with Three.js, GSAP, and ElevenLabs, the piece subverts traditional narrative formats by combining WebGL visuals with surreal affirmation, offering a meditation on death, absurdity, and digital intimacy.

Party Time - Web Experience

A chaotic, joyfully nonsensical browser experience built with WebSockets and dripping with ’90s clip art and MS Paint energy. Designed for collaborative mayhem, it lets users create audiovisual collages together in real time, though the real prize lies in catching the elusive bouncing button. If you can hit it, you’ll be rewarded. Probably.

Final Thoughts

These speculative works are more than experiments—they’re proof-of-concept provocations. They inform my approach to commercial and institutional creative work, giving me firsthand knowledge of the tools, affordances, and cultural implications of emerging technology.

By maintaining an R&D practice rooted in performance, embodiment, and code, I stay fluent in the language of innovation, positioning myself not just to adopt the future of creative work but to help shape it.